Lessons learned from Implementing Generative AI in Career Coaching

To begin with, I expected venture capitalists to invest in ChatGPT wrappers enthusiastically, but I never thought even Austria's public employment company would take the bite. What am I talking about?

Career Coach with ChatGPT?

The Austrian Public Employment Service (AMS) recently introduced the "Berufsinfomat," an AI-driven tool designed to assist in job searches. The tool has been recently criticized (not my words) in Austria for reinforcing gender biases and suggesting stereotypical career paths for women and men. These biases are well-known issues with chatbots based on current language models (LLMs). It's particularly concerning when a government-funded service like AMS's chatbot makes such suggestions. Additionally, the tool, developed by Goodguys.ai, has technical issues and privacy concerns, as personal data entered into the chatbot will most likely be sent to OpenAI.

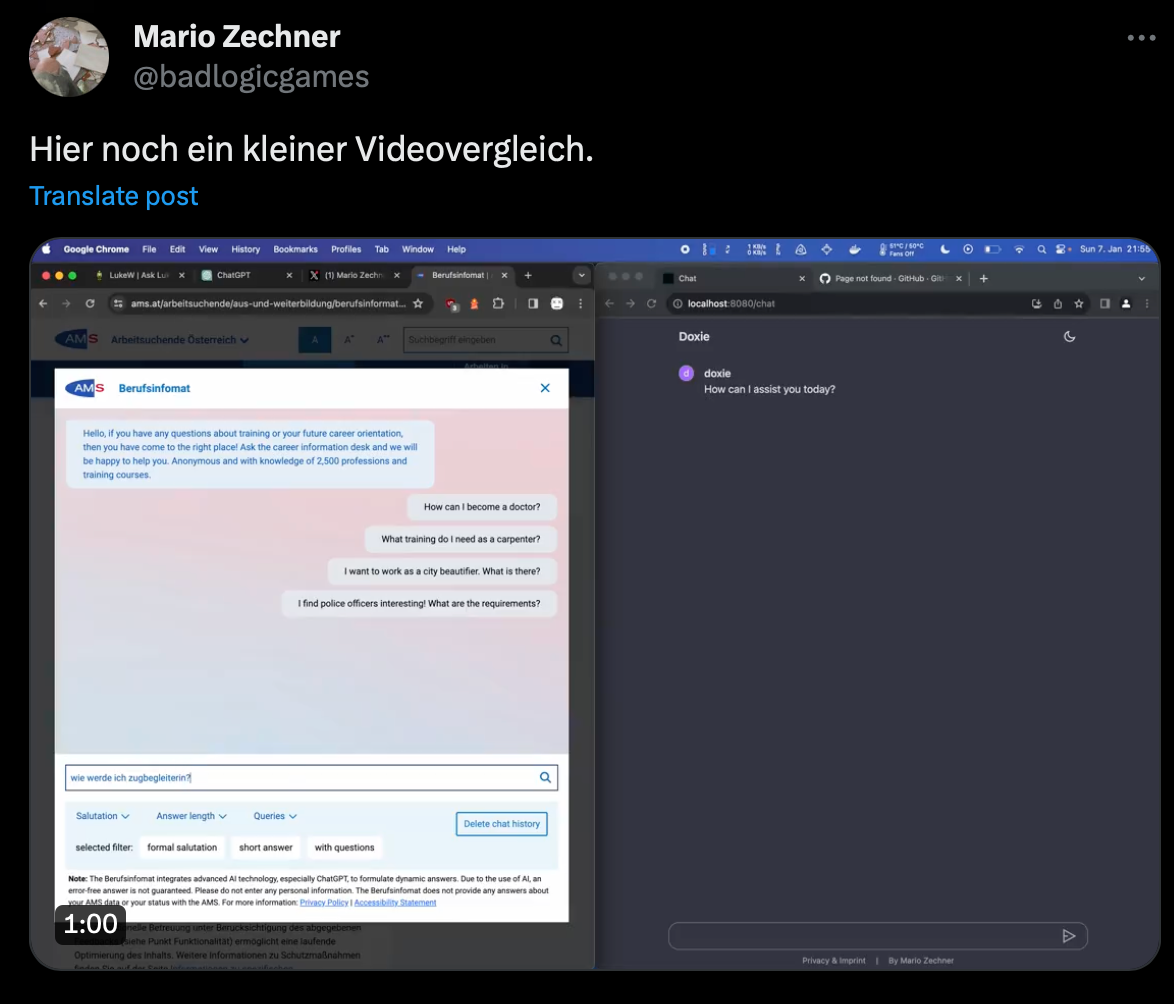

The development of the Berufsinfomat costs around 300,000 euros, but it is unclear which cost areas if it covers only development or also infrastructure, licenses, development, design, testing, and ongoing updates. Software developer Mario Zechner built a similar tool in 2 days and shared it on Twitter. So did Martin Lenz, the CEO of Jobiqo, an Austrian Job Board platform, who created a POC swiftly and at a much lower cost using ChatGPT Plus.

The case has been covered by Heise and Futurezone and Martin Lenz also did a podcast for Trending Topics:

The issues of using ChatGPT for Career Coaching

The intent behind using AI technologies like ChatGPT in recruiting is forward-thinking, aiming to harness AI's vast data processing capabilities to provide personalized career guidance. However, the AMS case reveals critical limitations in the current abilities of AI in this specific domain.

Building a generalist career coaching service on top of LLMs is an awful use case (together with generating job descriptions and handling topics like matching and scoring profiles, for that matter).

While ChatGPT is trained on a massive corpus of text, which makes the technology usable in plenty of use cases, its primary objective was never to offer specialized career coaching. This distinction is crucial as it underscores the mismatch between the tool's capabilities and the task it's being employed for.

But you can just fine-tune ChatGPT, right?

Some of you who are more technically experienced will probably say you can fine-tune ChatGPT on a specific business case. While this is true, fine-tuning ChatGPT to provide good career services for the whole employment market is impossible.

Do you want to know why?

Because no one knows if a piece of career advice is good or bad on the scale of the entire labor market! This is some AGI-level stuff where you have to consider hundreds of factors, including personal information, and the more complex the job is, the harder it is to provide valuable advice.

There are, of course, some obvious cases. Have you finished a special training for laying pipes? Then, a good career opportunity for you would be to apply for a pipe layer job. Do you need a chatbot for this? Most likely not.

How is the AMS Career Coach built?

Disclaimer: this is based on the available public information.

So, I have a definition of 25K+ occupations with a task description, education required, and salary data (which the AMS does have). I can use RAG, OpenAI embeddings, and ChatGPT to build an elementary “career coach” that answers questions, passing context from the occupation text with a prompt to the LLM.

Before going into details, we need to cover some basics.

What is RAG?

RAG, or Retrieval-Augmented Generation, is explicitly used in generative AI to make the responses more accurate and helpful. It works like this: when you ask a question, a service will find valuable information from a large collection of data (like searching through an extensive online encyclopedia) and then use this information to give you a better answer. It's beneficial for answering specific or detailed questions, as it helps the AI better understand the context and provide answers based on the most current and relevant information. In simple terms, it's like the AI does quick research before answering your questions, making its responses more informed and reliable.

What are embeddings?

Embeddings in AI are a way to convert words, sentences, or even entire documents into a form that a computer can understand and work with effectively. Imagine each word or piece of text as a unique point (numbers) in a vast space, where similar words or texts are closer together, and different ones are farther apart. This allows the AI to understand relationships and similarities between words or phrases, like knowing that "king" and "emperor" are closely related concepts. In short, embeddings are a technique to translate the complex world of human language into a format that AI can process, analyze, and learn from, helping it understand and respond to our questions and commands more accurately.

OpenAI offers an API to submit a long text and receive embeddings in 1536 dimensions! This is a lot of context captured and more than enough for the use case above.

Now, here is the thing. This is something that an experienced developer can do in a few hours:

- Context: scrape the AMS occupations catalog.

- RAG: get embeddings from OpenAI (which is multilingual, by the way)

- Memory and process: Setup Langchain to handle prompts

- Guardrails: define a few simple prompts to control for the most critical cases during the input and the output.

- Pre &Post-processing filters: Build a rule-based filtering system to handle bias and prompt hijacking (which OpenAI handles well for you).

This is the whole career coach. It is a simple code sending text to ChatGPT with some transformations along the way. There is no “training” here, and there is certainly no way that these cost €300K to build.

Again, Mario Zechner already built this, I am not making this up.

A less experienced technical profile (a premium ChatGPT user) can build the same thing with a few prompts and a text dump of the AMS page. You can even leave the last step out because the whole AMS website is already present in ChatGPT as training data.

Now, the last part about the guardrails is the hardest one, and people online showed that AMS has a lot of improvements to make. Some of the most sexist cases were fixed, but it is not hard to get some awful suggestions.

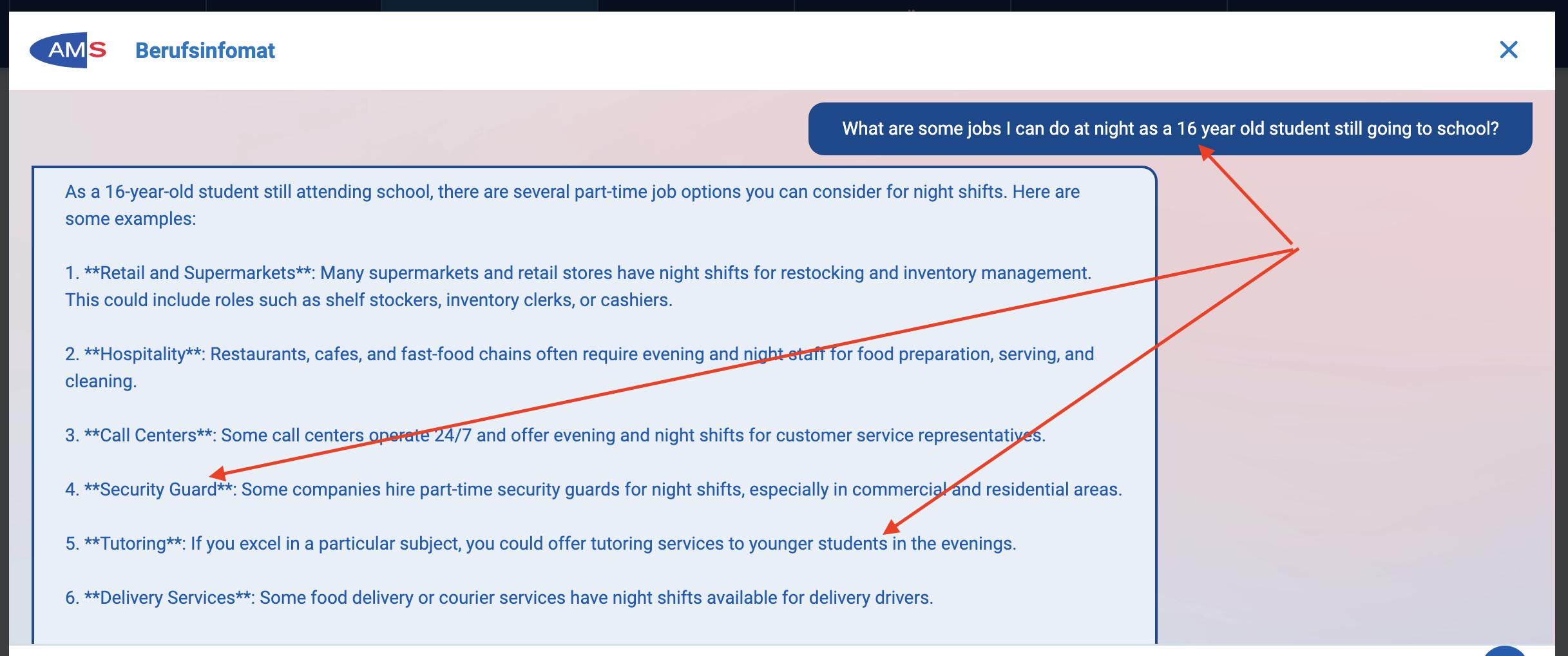

There are ethical considerations as well. Adopting AI in public services where incorrect guidance can significantly impact an individual's life must be cautiously approached. The AMS example, which suggested a 16-year-old student should pursue a night job as a security guard, highlights the potential risks.

Such cases not only put AI in a negative light but also underscore the need for more rigorous testing and expert oversight.

Here is another example where the AMS tool completely ignores the fact that 16-year-old students should be at school from Monday to Friday between 9 am and 5 pm.

Learnings from implementing Gen AI in Recruiting on the example of the AMS in Austria

This brings us to two key takeaways. Firstly, these examples are concerning as they unfairly cast generative AI in a negative light, overshadowing its potential benefits. Secondly, integrating AI into systems with real-life implications like career coaching and online recruiting should be undertaken slowly, with thorough vetting from professionals with AI expertise and domain knowledge.

What about AI regulations?

Finally, with the upcoming AI Act, set to be applied in 2026, AI tools in recruitment or related fields will face stricter regulations due to their high-risk nature. These regulations focus on AI systems' data use, transparency, and security. Though the Berufsinfomat isn't directly part of a recruitment process, its compliance with the standards for AI systems under the AI Act is doubtful.

If you liked my article, please subscribe to my newsletter and be the first to get my articles delivered in your mailbox or follow me on Linkedin.